Classical Tests

A. Two samples

The classical tests for two samples include:

- comparing two variances (Fisher's F test:

var.test()) - comparing two sample means with normal errors (Student's t test:

t.test()) - comparing two means with non-normal errors (Wilcoxon's rank test:

wilcox.test()) - comparing two proportions (the binomial test:

prop.test()) - correlating two variables (Pearson's or Spearman's rank correlation:

cor.test()) - testing for independence of two variables in a contingency table (chi-squared,

chisq.test(), or Fisher's exact test:fisher.test()).

Comparing sample means

normal errors: t.test()

A notched boxplot is useful for graphically showing the difference between samples.

A <- c(3, 4, 4, 3, 2, 3, 1, 3, 5, 2)

B <- c(5, 5, 6, 7, 4, 4, 3, 5, 6, 5)

t.test(A, B)##

## Welch Two Sample t-test

##

## data: A and B

## t = -3.873, df = 18, p-value = 0.001115

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -3.0849 -0.9151

## sample estimates:

## mean of x mean of y

## 3 5if samples are linked in some way: paired t test

down <- c(20, 15, 10, 5, 20, 15, 10, 5, 20, 15, 10, 5, 20, 15, 10, 5)

up <- c(23, 16, 10, 4, 22, 15, 12, 7, 21, 16, 11, 5, 22, 14, 10, 6)

t.test(down, up)##

## Welch Two Sample t-test

##

## data: down and up

## t = -0.4088, df = 29.75, p-value = 0.6856

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -5.248 3.498

## sample estimates:

## mean of x mean of y

## 12.50 13.38t.test(down, up, paired = TRUE)##

## Paired t-test

##

## data: down and up

## t = -3.05, df = 15, p-value = 0.0081

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -1.4864 -0.2636

## sample estimates:

## mean of the differences

## -0.875non-normal errors: wilcox.test()

wilcox.test(A, B)## Warning: cannot compute exact p-value with ties##

## Wilcoxon rank sum test with continuity correction

##

## data: A and B

## W = 11, p-value = 0.002988

## alternative hypothesis: true location shift is not equal to 0Comparing counts or proportions

sign test

This is one of the simplest of all statistical tests. Suppose that you cannot measure a difference, but you can see it (e.g. in judging a diving contest). For example, nine springboard divers were scored as better or worse, having trained under a new regime and under the conventional regime (the regimes were allocated in a randomized sequence to each athlete: new then conventional, or conventional then new). Divers were judged twice: one diver was worse on the new regime, and 8 were better.

What is the evidence that the new regime produces significantly better scores in competition? The answer comes from a two-tailed binomial test. How likely is a response of 1/9 (or 8/9 or more extreme than this, i.e. 0/9 or 9/9) if the populations are actually the same (i.e. p=0.05)? We use a binomial test for this, specifying the number of 'failures' (1) and the total sample size (9):

binom.test(1, 9)##

## Exact binomial test

##

## data: 1 and 9

## number of successes = 1, number of trials = 9, p-value = 0.03906

## alternative hypothesis: true probability of success is not equal to 0.5

## 95 percent confidence interval:

## 0.002809 0.482497

## sample estimates:

## probability of success

## 0.1111comparing proportions: prop.test()

Requires success, totals

prop.test(c(4, 196), c(40, 3270))## Warning: Chi-squared approximation may be incorrect##

## 2-sample test for equality of proportions with continuity

## correction

##

## data: c(4, 196) out of c(40, 3270)

## X-squared = 0.5229, df = 1, p-value = 0.4696

## alternative hypothesis: two.sided

## 95 percent confidence interval:

## -0.06592 0.14604

## sample estimates:

## prop 1 prop 2

## 0.10000 0.05994independence of two variables via contingency tables: chi square test

Requires a matrix of values

count <- matrix(c(38, 14, 11, 51), nrow = 2)

count## [,1] [,2]

## [1,] 38 11

## [2,] 14 51Run the function on this matrix

chisq.test(count)##

## Pearson's Chi-squared test with Yates' continuity correction

##

## data: count

## X-squared = 33.11, df = 1, p-value = 8.7e-09chisq.test(count)$expected## [,1] [,2]

## [1,] 22.35 26.65

## [2,] 29.65 35.35What if the probabilities are unequal? We can specify our expected probabilities.

chisq.test(c(10, 3, 2, 6), p = c(0.2, 0.2, 0.3, 0.3))## Warning: Chi-squared approximation may be incorrect##

## Chi-squared test for given probabilities

##

## data: c(10, 3, 2, 6)

## X-squared = 11.3, df = 3, p-value = 0.0102We can use table() to generate our matrix (runif() is a random number generator we will discuss in a later section).

die <- ceiling(runif(100, 0, 6))

table(die)## die

## 1 2 3 4 5 6

## 19 16 18 18 15 14

chisq.test(table(die))##

## Chi-squared test for given probabilities

##

## data: table(die)

## X-squared = 1.16, df = 5, p-value = 0.9487Fishers Exact Test

x <- as.matrix(c(6, 4, 2, 8))

dim(x) <- c(2, 2)

x

fisher.test(x)B. correlation coefficients

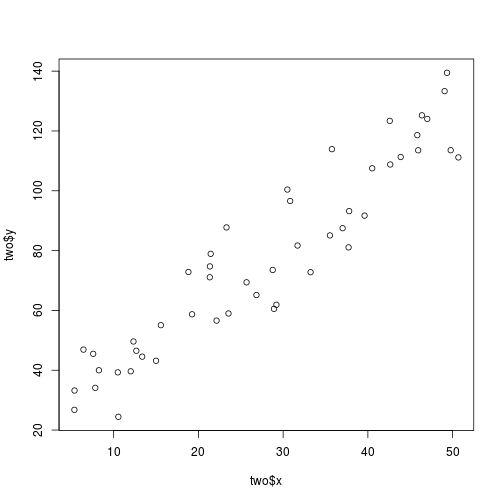

Are two samples are correlated?

two <- read.table("../data/twosample.txt", header = TRUE) # load some data

plot(two$x, two$y) # and plot it

plot of chunk twosamp

cor()

cor(two$x, two$y)

cor.test(two$x, two$y)

cor.test(two$x, two$y, method = "spearman")Kolmogorov-Smirnov test

- Are two sample distributions the same, or are they significantly different from one another in one or more (unspecified) ways?

- Does a particular sample distribution arise from a particular hypothesized distribution?

ks.test(two$x, two$y)##

## Two-sample Kolmogorov-Smirnov test

##

## data: two$x and two$y

## D = 0.7347, p-value = 4.22e-13

## alternative hypothesis: two-sidedC. Bootstrapping

We want to use bootstrapping to obtain a 95% confidence interval for the mean of a vector of numbers called values:

skew <- read.table("../data/skewdata.txt", header = TRUE)

names(skew)## [1] "values"We shall sample with replacement from values using sample(values, replace = TRUE), then work out the mean, repeating this operation 10,000 times using a loop, and storing the 10,000 different mean values in a vector called ms.

ms <- numeric(10000)

for (i in 1:10000) {

ms[i] <- mean(sample(skew$values, replace = TRUE))

} # end of loopThe answer to our problem is provided by the quantile() function applied to ms: we want to know the values of ms associated with the 0.025 and the 0.975 tails of ms

quantile(ms, c(0.025, 0.975))## 2.5% 97.5%

## 24.86 37.97So the intervals below and above the mean are

mean(skew$values) - quantile(ms, c(0.025, 0.975))## 2.5% 97.5%

## 6.106 -7.006